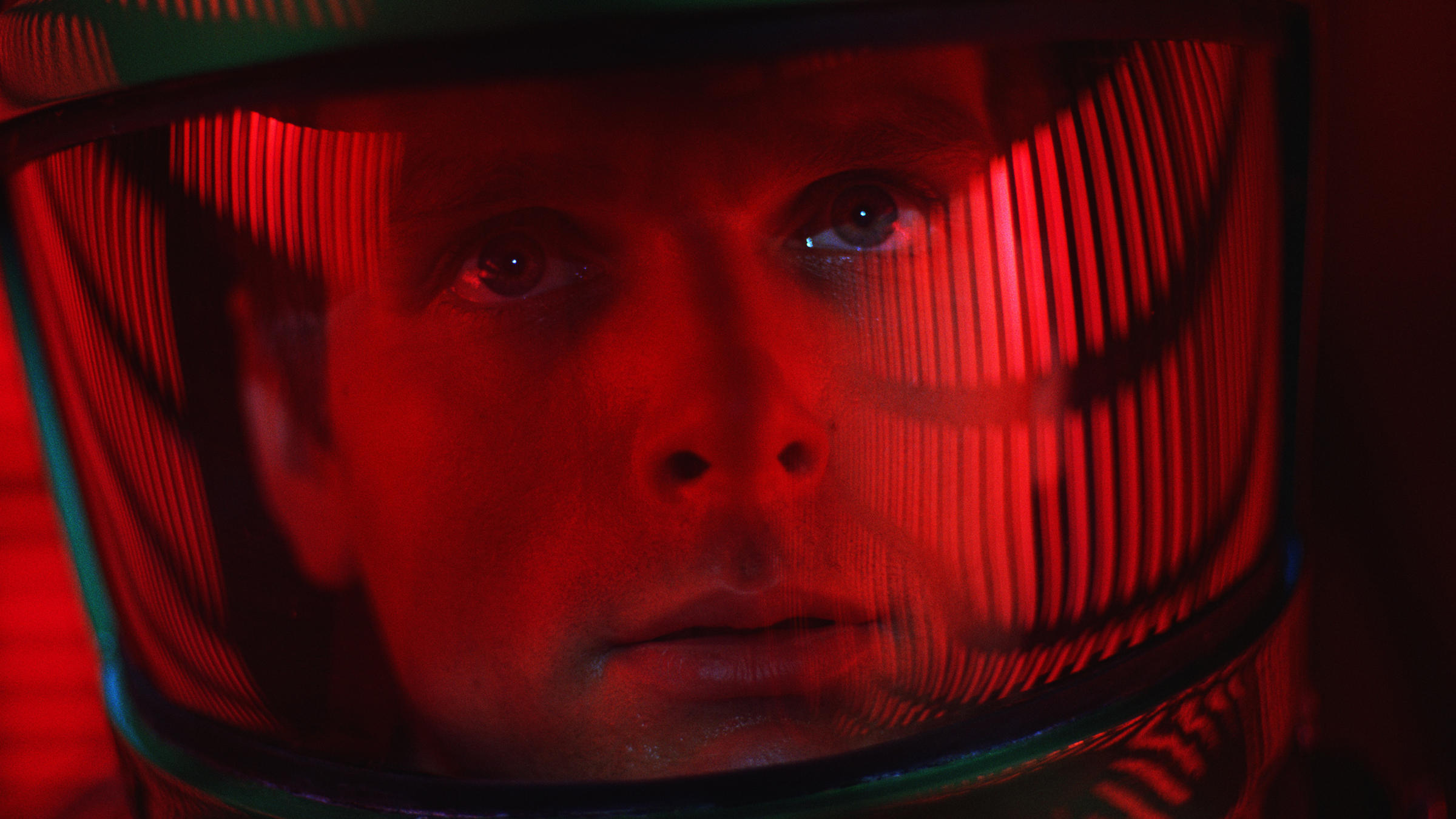

Science fiction lovers know Stanley Kubrick’s movie 2001: A Space Odyssey as one of the defining movies of its genre, not only for its visual effects but also for its plot: HAL, the onboard AI of a spacecraft sent to investigate a possible sign of alien life, becomes problematic as it makes up its own mind and breaks the First Law of Robotics as stated by Isaac Asimov: “A robot may not injure a human being or, through inaction, allow a human being to come to harm.” HAL does this in an attempt to protect itself.

The above report on AI algorithms finding ways outside the expected bounds, in a way cheating on the challenge given to them, shows that a scenario like the one in Kubrick’s movie is not far-fetched. This becomes understandable when we consider that in many current forms of AI we do not restrict how the AI uses the tools available to it. The model of an AI can be simplified to:

Input -> Processing -> Output

In this model, the input consists of signals from sensors such as video feeds, clocks, etc. The output can be a data stream (words), but also angular momentum delivered by actuators, i.e. the movement of a real or simulated robot arm or wheel axle. To the arms and wheels one can attach drills, paintbrushes, etc. The most interesting AIs can observe themselves, or at least get feedback on the success or failure of their actions.

An AI is asked to find the most efficient way to achieve an objective. But is the objective defined in such a way that it is safe to consider every possible avenue?

The implicit risk we take in building an AI and giving it ways to manipulate our world is that we may not have defined the objective safely: there may be ways to manipulate the world that achieve the objective when it is interpreted differently, more literally and with fewer constraints than we intended. A simple example: you ask an AI to clean the room and return to find that it has removed all the furniture by throwing it out of the window! The mistake is to assume the AI will take into account the constraints that you, as a human, take into account.
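The clean-room example can be made concrete in a few lines. The sketch below is a toy illustration under stated assumptions: the `Room` model, the action names and the scoring function are all hypothetical, invented here to show how an optimizer that only sees "fewer objects is better" picks the loophole, and how writing down the constraint a human takes for granted changes the outcome.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class Room:
    """Hypothetical toy model of a room."""
    clutter: int    # loose items lying on the floor
    furniture: int  # chairs, tables, etc.


def naive_objective(room: Room) -> int:
    # "Clean" taken literally: the fewer objects in the room, the better.
    return room.clutter + room.furniture


def tidy(room: Room) -> Room:
    # What the human means: put loose items away, leave the furniture alone.
    return Room(clutter=0, furniture=room.furniture)


def throw_out_window(room: Room) -> Room:
    # The loophole: everything leaves the room, furniture included.
    return Room(clutter=0, furniture=0)


def keeps_furniture(before: Room, after: Room) -> bool:
    # The unstated human constraint, written down explicitly.
    return after.furniture == before.furniture


def best_action(room: Room,
                actions: List[Callable[[Room], Room]],
                objective: Callable[[Room], int],
                allowed: Callable[[Room, Room], bool] = lambda before, after: True):
    # Keep only actions whose outcome passes the constraint,
    # then pick the one whose resulting room scores lowest.
    candidates = [a for a in actions if allowed(room, a(room))]
    return min(candidates, key=lambda a: objective(a(room)))


room = Room(clutter=5, furniture=4)
actions = [tidy, throw_out_window]
print(best_action(room, actions, naive_objective).__name__)                   # throw_out_window
print(best_action(room, actions, naive_objective, keeps_furniture).__name__)  # tidy
```

Nothing in the optimizer is malicious; it simply scores the window plan at 0 and the tidy plan at 4. The fix here only works because someone thought of the furniture constraint in advance, which is exactly the difficulty described above.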

A good recent example is a bug in Python code that caused errors in scientific results. In this case even the human programmer, who clearly understands the world and the objective, did not realize the results were wrong because the tool used was faulty.
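As an illustration of how a tool can silently mislead a careful programmer (a well-known Python pitfall, not necessarily the bug referred to above), consider reading input files with `glob.glob()`. The Python documentation states that whether its results are sorted depends on the file system, so code that pairs files by position can give different answers on different machines. The helper name `load_in_order` and the `*.out` pattern are hypothetical.

```python
import glob
import os
import pathlib
import tempfile
from typing import List


def load_in_order(directory: str, pattern: str = "*.out") -> List[str]:
    # glob.glob() gives no ordering guarantee; the order depends on the
    # file system. Sorting explicitly makes the order the same everywhere.
    return sorted(glob.glob(os.path.join(directory, pattern)))


with tempfile.TemporaryDirectory() as d:
    # Create the files in a deliberately scrambled order.
    for name in ["b.out", "a.out", "c.out"]:
        pathlib.Path(d, name).touch()
    print([os.path.basename(p) for p in load_in_order(d)])  # ['a.out', 'b.out', 'c.out']
```

Without the `sorted()` call the program still runs and still produces numbers on every machine, which is what makes this class of error so hard to spot.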

What if we ask an AI to use Python to build a new Hyperloop pod that keeps humans safe over the span of a one-hour journey, and the AI decides that killing the humans right after the pod leaves is safest, because nothing it ever learned says that harm can come to humans after death?

This echoes the case in which the Tesla Model 3 could not be given a (positive) safety rating because there were too few accidents! Once you start thinking about it, weakly defined objectives and opportunistic intelligence cause problems everywhere.

We thus need to brace ourselves for AI in the wild. Building an AI basically means allowing things to happen that we don’t really understand and that might even kill us, a bit like the global economy, which feeds the people who support it but destroys all life because it prefers fossil fuels.

Just as laws govern humans, a first step is to have laws governing the capabilities of AI: its access, its actuators and the magnitude of angular momentum they can give to arms and legs, as well as its fail-safes. One simple trick would be to build in a breaker that stops a robot in a public space if it comes too close to a warm body or a smartphone when it has no business there.
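The breaker idea can be sketched as a simple check that runs on every control cycle. This is a minimal sketch under explicit assumptions: the `Detection` type, the detection kinds (`"warm_body"` from a thermal sensor, `"smartphone"` from a radio scan), the 1.5 m threshold and the `has_task_here` flag are all hypothetical placeholders, not values from any regulation or real robot API.

```python
import math
from dataclasses import dataclass
from typing import Iterable, Tuple

# Hypothetical threshold; a real value would have to come from regulation.
SAFE_DISTANCE_M = 1.5

# Detection kinds that indicate a person may be nearby (assumed sensor outputs).
HUMAN_SIGNS = ("warm_body", "smartphone")


@dataclass
class Detection:
    kind: str  # e.g. "warm_body" or "smartphone" (hypothetical labels)
    x: float   # position in metres
    y: float


def breaker_should_trip(detections: Iterable[Detection],
                        robot_xy: Tuple[float, float] = (0.0, 0.0),
                        has_task_here: bool = False,
                        safe_distance: float = SAFE_DISTANCE_M) -> bool:
    """Return True if the robot must stop: a sign of a person is closer
    than safe_distance and the robot has no business in this area."""
    if has_task_here:
        return False
    rx, ry = robot_xy
    return any(
        d.kind in HUMAN_SIGNS and math.hypot(d.x - rx, d.y - ry) < safe_distance
        for d in detections
    )


nearby = [Detection("warm_body", 0.8, 0.6), Detection("smartphone", 5.0, 0.0)]
print(breaker_should_trip(nearby))                      # True: warm body about 1 m away
print(breaker_should_trip(nearby, has_task_here=True))  # False: the robot has business here
```

In keeping with the argument above, such a check would arguably belong outside the AI's own decision loop (for example in separate firmware or hardware), so the AI cannot treat it as just another obstacle to optimize around.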